Securing Fast and Slow – From Reactive Incidence Response to Proactive Attack Surface Reduction

As more enterprise clients adopt IONIX as their External Attack Surface Management solution, we are seeing a distinct, bimodal pattern with regard to the workflows and processes used to mitigate and remediate issues. The “slow” workflow is where more planning and grooming takes place and where whole classes of findings, rather than individual ones, are addressed at a time. We now believe that this pattern is quite efficient and effective in reducing our clients’ attack surface and we want to raise awareness and discussions about it with this post.

Fast Workflow for Critical Vulnerabilities

The need for a fast path, or workflow, for handling critical alerts is obvious and well understood. Security operations and incidence response teams will handle individual critical findings such as misconfigurations, vulnerabilities, or breaches as soon as they’re discovered. In many organizations, this workflow is the sole mode of operation.

A finding (or security event) is attended to and remediated only if it reaches the top of the pile in terms of priority. Sometimes there are escalation rules based on the amount of time a ticket has been opened. Theoretically, this means any finding will eventually reach all the way to the top. However, in practice, the low-severity/urgency alerts rarely if ever get triaged or resolved. However, like in human cognition and reasoning, there’s a loss of efficiency and ultimately, increased risk in sticking to just this one approach.

Slow Workflow for Systematic Issues

In the aggregate, similar low-severity findings that are found across many assets can point to an equal or greater risk as any one critical finding. Such findings may also point to a hidden common cause that, if attended to and corrected, could prevent the occurrence of future cases of the issue.

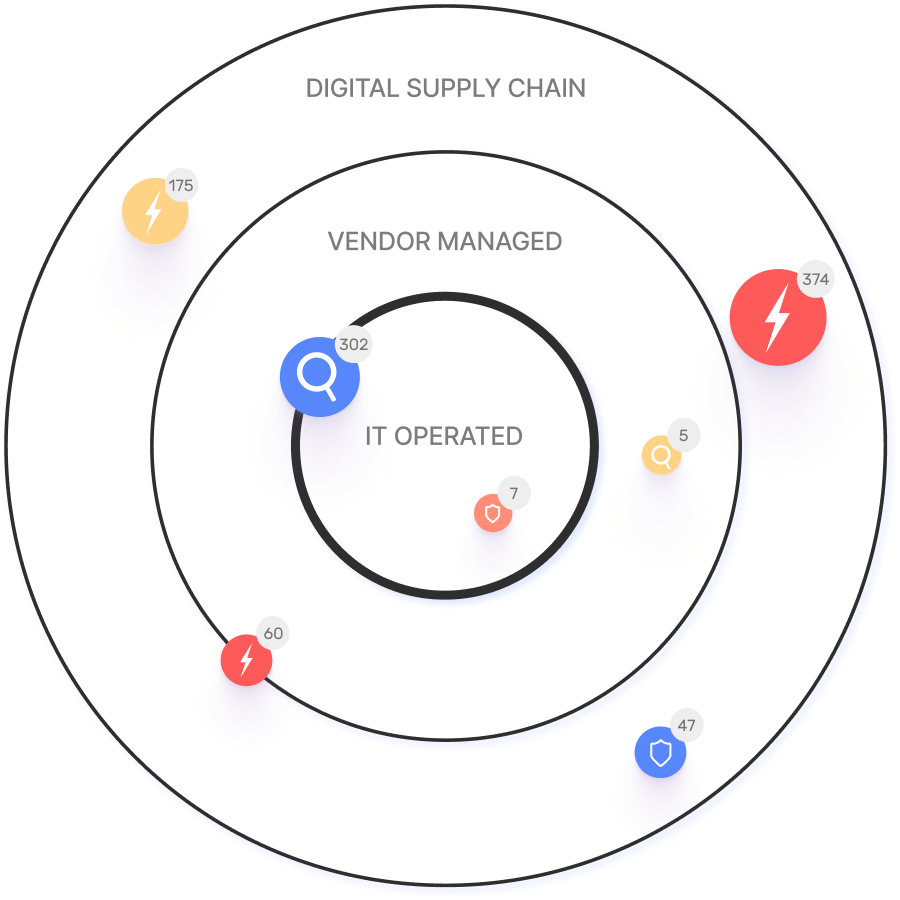

Specifically for our clients, IONIX’s attack surface management platform automatically analyzes and categorizes low-level security findings and other asset metadata to make next-best-step recommendations. The platform evaluates various indicators to assess both the use of the asset and its level of disrepair. Typically, it finds assets with various issues that have accrued over time–the use of old or obsolete components, expired certificates, weak SSL configurations–which, when considered in isolation, would never be prioritized high enough to be considered critical. This automated evaluation process also takes into account the level of connectedness these assets have to other organizational assets; the less connected they are, the more likely they will be recommended for removal. Thus, the assets it recommends for removal tend to be somewhat peripheral and isolated from the organization’s main online sites.

More often than not, the assets on the removal list have been orphaned or otherwise neglected. They are not known or monitored by the organization’s IT security teams. What the client gets in the end is a machine-curated list of fully qualified domain names (FQDNs) with the action “remove”, i.e., do not make these assets accessible (or even resolvable) from the internet.

Now, the “slow path” is the workflow in which these removal recommendations are attended to. For agile teams, removal recommendations could be accomplished in 2-4 week sprints.

The Impact of Consistent Attack Surface Management

We have clients that use this “slow” process to remove as much as 12% of the total number of FQDNs that were resolvable from the internet. Not only did the removal of these assets significantly decrease their attack surface, but also their total number of findings, especially the long tail of low-severity issues. As some of our system’s indicators for removal are also evaluated by various security rating companies, a byproduct of this process has been a marked increase in these clients’ security ratings.

Beyond the removal of orphaned assets, the slow path workflow has been used successfully to analyze the whole set of security alerts. The analysis finds patterns that point to a common cause that can then be addressed and rectified, thus proactively preventing future breaches.

How does your organization attend to low-severity findings? Does it ever attend to it? Does it analyze the data to find patterns? Let us know in the comments.