Frequently Asked Questions

AI Threats & Uses in Cybersecurity

How are AI technologies like ChatGPT and GenAI impacting cybersecurity?

AI technologies such as ChatGPT and other generative AI (GenAI) tools are making cyberattacks both more potent and more frequent. Cybercriminals use these tools to automate attacks, write malicious code, create deepfakes, and generate malware and spyware at scale. Organizations must adapt by using AI for proactive threat detection and response. (Source)

What are some examples of AI-driven cyberattacks?

Examples of AI-driven cyberattacks include brute-force password and credential cracking, spyware and malware built at scale, deepfake video scams, and large-scale phishing campaigns. AI lets attackers automate and accelerate these attacks, making them harder to detect and defend against. For instance, deepfake videos have been used to scam organizations out of millions of dollars. (Source)

How does AI empower both traditional and new cyberattacks?

AI multiplies the impact of traditional attacks such as brute force, malware, spyware, impersonation, and login spoofing by making them faster, more powerful, and harder to detect. AI also introduces new attack types, such as deepfakes and large-scale automated phishing, which expand the threat landscape organizations must defend against. (Source)

What are the risks associated with the increasing use of AI in cybersecurity?

Risks include attackers exploiting AI systems to automate attacks, generate malware, and create deepfakes for impersonation and fraud. If attackers gain access to AI-powered cybersecurity tools, they can exploit vulnerabilities or launch sophisticated attacks. Organizations must implement strong protocols and oversight to mitigate these risks. (Source)

Can AI replace humans in cybersecurity risk prevention?

No, AI cannot fully replace humans in cybersecurity risk prevention. Most AI-powered cybersecurity systems still require human oversight and intervention. AI excels at automating tasks and detecting patterns, but lacks the situational awareness and judgment of human experts. Transparency and human input are essential for effective risk management. (Source)

IONIX Platform Features & Capabilities

What is IONIX and what does it do?

IONIX is an External Exposure Management platform designed to identify exposed assets and validate exploitable vulnerabilities from an attacker's perspective. It cuts through the flood of alerts so that security teams can prioritize the most critical remediation activities. Key features include complete attack surface visibility, identification of potentially exposed assets, validation of exposed assets at risk, and prioritization of issues by severity and context. (Source)
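
To make "prioritization of issues by severity and context" more concrete, here is a minimal Python sketch of one way such a ranking could work. It is an illustration only, not IONIX's actual scoring model: the `Finding` fields, the `SEVERITY_WEIGHT` values, and the bonus points for validated or business-critical assets are all assumptions chosen for the example.

```python
from dataclasses import dataclass

# Hypothetical severity weights; not taken from any IONIX documentation.
SEVERITY_WEIGHT = {"critical": 4, "high": 3, "medium": 2, "low": 1}


@dataclass
class Finding:
    asset: str
    severity: str            # one of the SEVERITY_WEIGHT keys
    validated: bool          # exploitability was confirmed from the outside
    business_critical: bool  # context: the asset supports a key service


def priority(finding: Finding) -> int:
    """Combine base severity with context into a single score."""
    score = SEVERITY_WEIGHT[finding.severity]
    if finding.validated:
        score += 2  # a confirmed exposure outranks a theoretical one
    if finding.business_critical:
        score += 1
    return score


def prioritize(findings: list[Finding]) -> list[Finding]:
    """Return findings ordered from most to least urgent."""
    return sorted(findings, key=priority, reverse=True)


findings = [
    Finding("blog.example.com", "high", False, False),
    Finding("login.example.com", "medium", True, True),
    Finding("dev.example.com", "critical", False, False),
]

for f in prioritize(findings):
    print(priority(f), f.asset)
```

In this sketch the medium-severity issue on `login.example.com` ranks first, because it is both validated and business-critical. That is the general idea behind ranking by context rather than by raw severity alone.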

What are the key capabilities and benefits of IONIX?

IONIX offers complete external web footprint discovery, proactive security management, real attack surface visibility, continuous discovery and inventory, and streamlined remediation. These capabilities help organizations improve risk management, reduce mean time to resolution (MTTR), and optimize security operations. (Source)

What integrations does IONIX support?

IONIX integrates with tools such as Jira, ServiceNow, Slack, Splunk, Microsoft Sentinel, Palo Alto Cortex/Demisto, AWS Control Tower, AWS PrivateLink, and pre-trained Amazon SageMaker Models. These integrations enable seamless workflows and enhanced threat management. (Source)

Does IONIX offer an API for integrations?

Yes, IONIX provides an API that supports integrations with major platforms like Jira, ServiceNow, Splunk, Cortex XSOAR, and more. (Source)

Security & Compliance

What security and compliance certifications does IONIX have?

IONIX is SOC2 compliant and supports companies with their NIS-2 and DORA compliance, ensuring robust security measures and regulatory alignment. (Source)

How does IONIX address product security and compliance?

IONIX ensures robust security by maintaining SOC2 compliance and supporting organizations in meeting NIS-2 and DORA regulatory requirements. This helps customers align with industry standards and maintain a strong security posture. (Source)

Implementation, Onboarding & Support

How long does it take to implement IONIX and how easy is it to get started?

Getting started with IONIX is simple and efficient. The initial deployment takes about a week and requires only one person to implement and scan the entire network. Customers have access to onboarding resources like guides, tutorials, webinars, and a dedicated Technical Support Team. (Source)

What training and technical support does IONIX provide?

IONIX offers streamlined onboarding resources such as guides, tutorials, webinars, and a dedicated Technical Support Team to assist customers during the implementation process. (Source)

What customer service and support is available after purchasing IONIX?

IONIX provides technical support and maintenance services during the subscription term, including troubleshooting, upgrades, and maintenance. Customers are assigned a dedicated account manager and benefit from regular review meetings to ensure smooth operation. (Source)

Use Cases, Pain Points & Customer Success

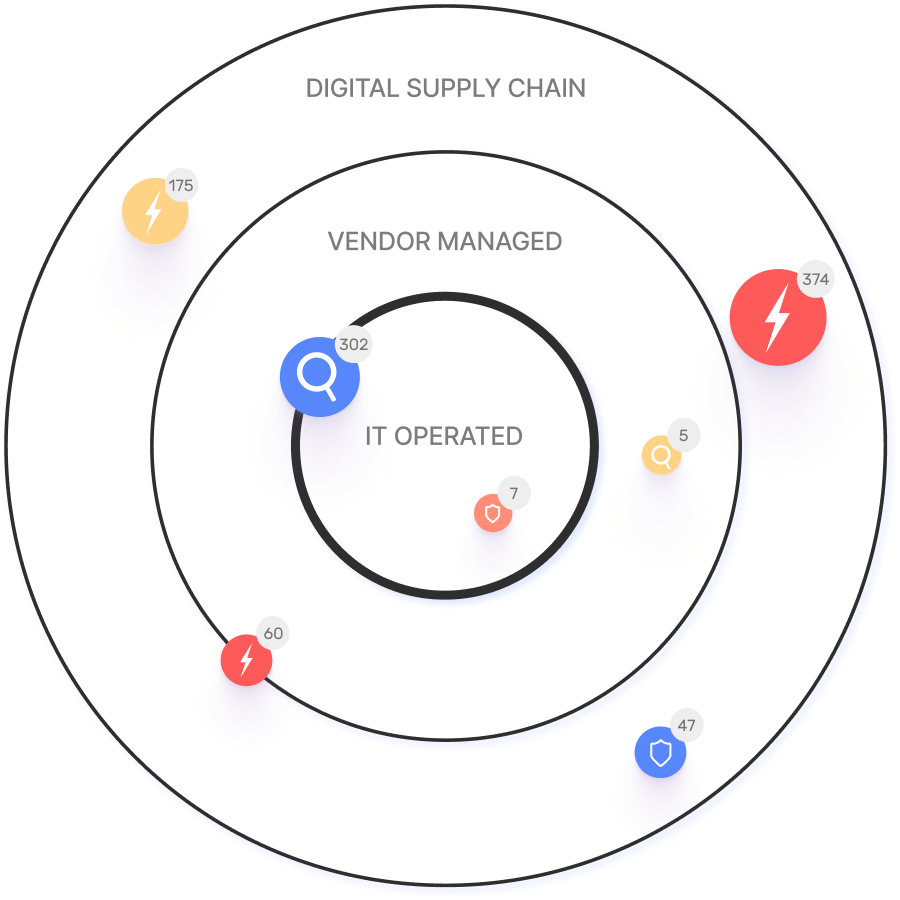

What core problems does IONIX solve?

IONIX solves problems such as identifying the complete external web footprint (including shadow IT and unauthorized projects), enabling proactive security management, providing real attack surface visibility, and ensuring continuous discovery and inventory of internet-facing assets and dependencies. (Source)

Who can benefit from using IONIX?

IONIX is tailored for roles such as Information Security and Cybersecurity VPs, C-level executives, IT managers, and security managers. It serves organizations across industries, including Fortune 500 companies, insurance, financial services, energy, critical infrastructure, IT, technology, and healthcare. (Source)

What customer success stories demonstrate IONIX's impact?

IONIX has helped E.ON continuously discover and inventory internet-facing assets, Warner Music Group boost operational efficiency and align security operations with business goals, and Grand Canyon Education enhance security by proactively discovering and remediating vulnerabilities. (E.ON, Warner Music Group, Grand Canyon Education)

What business impact can customers expect from using IONIX?

Customers can expect improved risk management, operational efficiency, cost savings, and enhanced security posture. IONIX enables visualization and prioritization of attack surface threats, actionable insights, reduced mean time to resolution (MTTR), and optimized resource allocation. (Source)
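
As a point of reference, MTTR is usually computed as the average time between detecting an issue and resolving it. The short Python sketch below shows that arithmetic. The incident timestamps are made up for illustration, and the function name `mean_time_to_resolution` is hypothetical rather than part of any IONIX API.

```python
from datetime import datetime, timedelta


def mean_time_to_resolution(incidents):
    """Average (resolved - detected) over a list of (detected, resolved) pairs."""
    durations = [resolved - detected for detected, resolved in incidents]
    if not durations:
        raise ValueError("need at least one resolved incident")
    return sum(durations, timedelta()) / len(durations)


# Illustrative data only: (detected, resolved) timestamps.
incidents = [
    (datetime(2024, 1, 1, 9, 0), datetime(2024, 1, 1, 17, 0)),   # 8 hours
    (datetime(2024, 1, 2, 9, 0), datetime(2024, 1, 3, 9, 0)),    # 24 hours
    (datetime(2024, 1, 5, 12, 0), datetime(2024, 1, 5, 16, 0)),  # 4 hours
]

mttr = mean_time_to_resolution(incidents)
print(mttr)  # 12:00:00
```

Tracking this figure before and after a change in tooling or process is one simple way to check whether remediation is actually getting faster.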

Product Performance & Recognition

How is IONIX rated for product innovation and usability?

IONIX earned top ratings for product innovation, security, functionality, and usability. It was named a leader in the Innovation and Product categories of the ASM Leadership Compass for completeness of product vision and a customer-oriented, cutting-edge approach to ASM. (Source)

What feedback have customers given about IONIX's ease of use?

Customers have rated IONIX as generally user-friendly and value having a dedicated account manager who keeps communication and support running smoothly. (Source)

Resources & Documentation

Where can I find technical documentation and resources for IONIX?

Technical documentation, guides, datasheets, and case studies are available on the IONIX resources page. (IONIX Resources)

Does IONIX have a blog and what topics does it cover?

Yes, IONIX has a blog that covers topics such as cybersecurity, risk management, vulnerability management, exposure management, and the impact of AI on cyber threats. Key authors include Amit Sheps and Fara Hain. (IONIX Blog)

Industry Recognition & Company Information

What industry recognition has IONIX received?

IONIX was named a leader in the 2025 KuppingerCole Attack Surface Management Leadership Compass and won the Winter 2023 Digital Innovator Award from Intellyx. The company has secured Series A funding to accelerate growth and expand platform capabilities. (Source)

Who are some of IONIX's customers?

IONIX's customers include Infosys, Warner Music Group, The Telegraph, E.ON, Grand Canyon Education, and a Fortune 500 Insurance Company. (IONIX Customers)